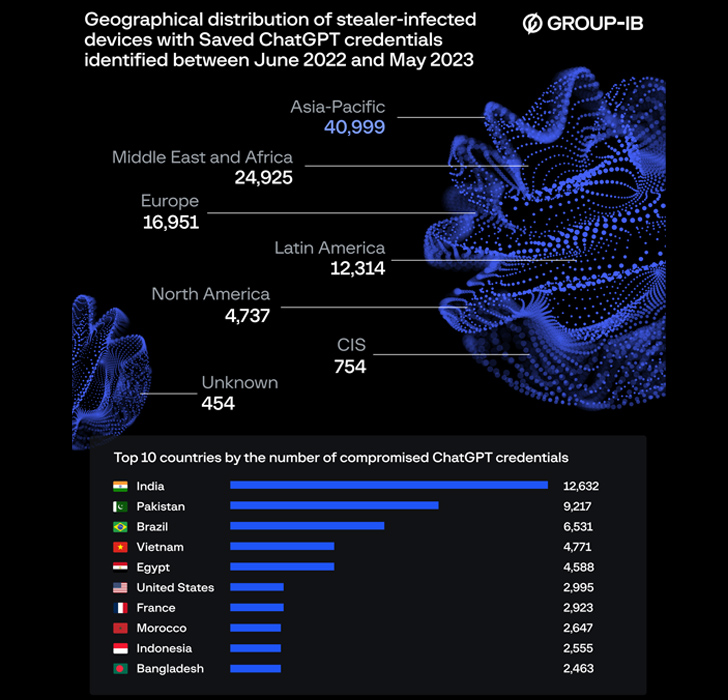

More than 101,100 compromised OpenAI ChatGPT account credentials were identified on illicit dark web marketplaces between June 2022 and May 2023, according to cybersecurity firm Group-IB. India alone accounted for 12,632 of the stolen credentials, which points to the global scope of the theft. The year-long accumulation of stolen accounts has raised serious concerns about data protection and cybersecurity in the AI industry.

Group-IB’s report also breaks down the stolen credentials by region. Nearly 17,000 belonged to European ChatGPT users, whose account details were stolen from devices infected by the Raccoon info stealer.

The Asia-Pacific region suffered the most severe impact, with approximately 41,000 stolen accounts. Among the countries in this region, India was the hardest hit, accounting for over 12,600 of these compromised accounts. The breadth of the breach underscores the global reach of the attack and the extent of its implications.

How did it happen?

The credentials were harvested from users’ infected devices, chiefly by the notorious Raccoon info stealer, according to analysis by Group-IB’s Threat Intelligence team. Group-IB maintains one of the industry’s largest libraries of dark web data, and its real-time monitoring of cybercriminal forums, marketplaces, and closed communities helped identify the compromised ChatGPT credentials.

Info stealers are a type of malware that is highly effective at collecting sensitive data such as credentials saved in browsers, bank card details, crypto wallet information, cookies, and browsing history. They can also harvest data from instant messengers and email clients and gather detailed information about the victim’s device. This malware operates non-selectively: it aims to infect as many computers as possible, usually through phishing or other deceptive tactics, in order to amass as much data as possible.

Rather than exploiting a flaw in ChatGPT itself, the Raccoon info stealer collected saved credentials and other sensitive data from infected computers and transmitted that data to the malware operators. With ChatGPT’s popularity rising, Group-IB has observed an uptick in the number of compromised accounts over the past year.

The stolen data, often referred to as ‘logs,’ includes information about the domains found in the log and the IP address of the compromised host. These logs, filled with the compromised personal data harvested by info stealers, are frequently traded on dark web marketplaces. In this case, the logs of ChatGPT accounts were discovered by Group-IB on one such marketplace, revealing the extent of the breach.

Adding to the severity of the situation, Group-IB reported a peak in the available malware logs containing compromised ChatGPT accounts. In May 2023, the number of such logs soared to an all-time high of 26,802. This spike in compromised accounts underlines the rapid escalation of the breach and the pressing need for effective countermeasures.

How did OpenAI respond?

OpenAI’s response comes against a backdrop of growing privacy concerns, which have become more prominent as ChatGPT’s popularity has surged. In March 2023, Italy banned the chatbot, citing its alleged “unlawful collection of personal data” and the absence of age-verification tools. Japan also issued a warning to OpenAI, asserting that data collection should not occur without explicit user permission.

OpenAI had previously experienced a separate data breach on March 20, 2023, which led to the leak of conversation histories and payment information of users subscribed to its premium service. In response to that incident, OpenAI CEO Sam Altman expressed regret and gave assurances that the problem had been fixed.

In an effort to enhance user privacy and security following that incident, OpenAI introduced an option for users to turn off their chat history. With chat history turned off, conversations would be deleted after 30 days, although OpenAI would continue to monitor the information for signs of abuse during that period. The company also clarified that if a user opts out of sharing their history, their data would not be used to train the chatbot further. These measures indicate OpenAI’s commitment to addressing privacy concerns and improving user data security.

How to protect your ChatGPT (OpenAI) account?

To mitigate the risks posed by credential theft, cybersecurity experts advise users to follow proper password hygiene practices. These include creating a strong, unique password for each online account and updating it regularly. Using a mix of upper- and lowercase letters, numbers, and symbols can enhance password strength and reduce vulnerability.
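
As a rough illustration of that advice, the sketch below generates a random password containing letters, numbers, and symbols using Python’s standard `secrets` module. The function name `generate_password` and the default length of 20 are assumptions made for this example, not recommendations from Group-IB or OpenAI; in practice a password manager does the same job.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password with at least one lowercase letter,
    uppercase letter, digit, and symbol (hypothetical helper)."""
    classes = [
        string.ascii_lowercase,
        string.ascii_uppercase,
        string.digits,
        string.punctuation,
    ]
    if length < len(classes):
        raise ValueError("length must be at least 4 to cover every character class")

    # Guarantee one character from each class.
    chars = [secrets.choice(cls) for cls in classes]

    # Fill the remaining positions from the combined alphabet.
    alphabet = "".join(classes)
    chars += [secrets.choice(alphabet) for _ in range(length - len(classes))]

    # Shuffle with an OS-backed random source so the guaranteed
    # characters do not always sit at the start.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


if __name__ == "__main__":
    print(generate_password())
```

Generating a separate password per account this way means that a single stolen credential cannot be reused against the user’s other accounts.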

Moreover, users are strongly encouraged to secure their accounts with two-factor authentication (2FA). This added layer of security requires a second form of identification beyond the password, such as a verification code sent to the user’s phone. With 2FA enabled, account takeover becomes far harder, even if the primary login credentials are compromised.
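
To make the idea of a second factor concrete, here is a minimal sketch of a time-based one-time password (TOTP) as specified in RFC 6238, the scheme used by many authenticator apps. It is an illustration only: the article describes codes sent to a phone, and nothing here is meant to describe how OpenAI implements 2FA. The function names `totp` and `verify` are hypothetical, and the demo secret is the published RFC 6238 test key.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for a base32 secret."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if at is None else at
    # Number of whole time steps since the Unix epoch.
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte
    # select a 4-byte window; the top bit is masked off.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret_b32: str, submitted: str, at: Optional[float] = None) -> bool:
    """Check a submitted code against the current time step only."""
    expected = totp(secret_b32, at=at, digits=len(submitted))
    # Constant-time comparison avoids leaking matching prefixes.
    return hmac.compare_digest(expected, submitted)


if __name__ == "__main__":
    # RFC 6238 test key, base32-encoded for use as a shared secret.
    demo_secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(demo_secret))
```

Because the code depends on a secret that is not part of the password and changes every 30 seconds, a password found in a stolen log is not sufficient on its own to pass this check.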