In a world increasingly reliant on artificial intelligence (AI), regulatory standards are evolving to ensure that these technologies are used responsibly. A recent study by Stanford researchers found that ten leading AI foundation models may not meet the stringent safety, transparency, and fairness requirements set out in the European Union’s forthcoming AI Act. Non-compliance could expose their providers to significant regulatory risk and potentially hefty financial penalties. In particular, the models were found to be susceptible to bias and could be used to generate harmful content.

What Does the EU’s AI Act Say?

The EU’s AI Act, whose draft the European Parliament approved on June 14th, is the world’s first comprehensive set of AI regulations. It is designed to ensure that AI is used safely, responsibly, and ethically. It will affect more than 450 million people and is likely to inspire other countries, such as the US and Canada, to develop their own AI rules.

The study’s findings suggest that the AI Act could significantly shape how AI foundation models, including large language models, are developed and used: providers whose models fall short of its requirements would face regulatory risk and potentially heavy fines.

Although recent clarifications exempt foundation models such as GPT-4 from the Act’s “high-risk” category, generative AI models remain subject to several requirements, including mandatory registration with the relevant authorities and key transparency disclosures, areas where many models currently fall short. The cost of non-compliance is steep, with fines of up to €20,000,000 or 4% of a company’s worldwide revenue.
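
The “€20,000,000 or 4%” formula is easier to read with numbers attached. The Python sketch below is only an illustration: it assumes the applicable cap is whichever of the two amounts is higher, the rule used in the European Commission’s original 2021 proposal (the final text may differ), and the function name `max_fine_eur` is hypothetical. Under that assumption, the 4% rule only overtakes the fixed amount once worldwide revenue passes €500,000,000 (20,000,000 / 0.04).

```python
# Illustrative sketch only. ASSUMPTION: the cap is the HIGHER of a fixed amount
# and a share of worldwide revenue, as in the Commission's 2021 proposal.

FIXED_CAP_EUR = 20_000_000  # fixed ceiling cited in the article
REVENUE_SHARE = 0.04        # 4% of worldwide revenue, cited in the article


def max_fine_eur(worldwide_revenue_eur: float) -> float:
    """Upper bound of a fine under the 'whichever is higher' assumption."""
    return max(FIXED_CAP_EUR, REVENUE_SHARE * worldwide_revenue_eur)


# Revenue at which 4% of revenue equals the fixed cap: 20M / 0.04 = 500M.
break_even_revenue = FIXED_CAP_EUR / REVENUE_SHARE

if __name__ == "__main__":
    for revenue in (100_000_000, 500_000_000, 10_000_000_000):
        print(f"revenue €{revenue:,} -> max fine €{max_fine_eur(revenue):,.0f}")
```

For a company with €10 billion in revenue, the 4% branch dominates and the ceiling rises to €400,000,000.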

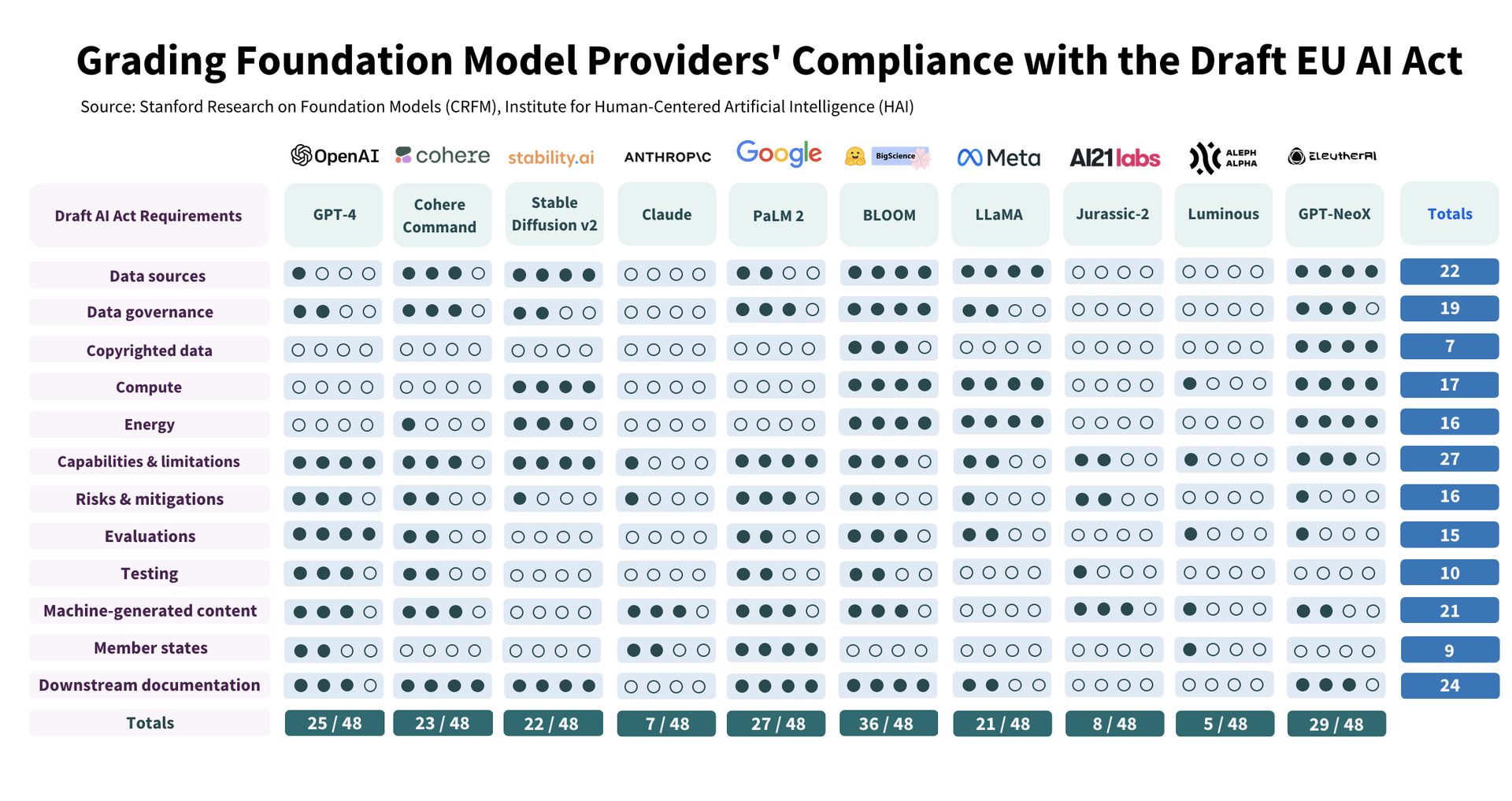

The Stanford study evaluated ten leading AI models against 12 fundamental requirements drawn from the draft AI Act. The results were concerning: most models scored below 50% overall compliance. Even OpenAI’s closed-source GPT-4 earned only 25 of a possible 48 points.
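
To see how a total out of 48 maps onto a compliance percentage, here is a small Python sketch. It assumes each of the 12 requirements carries equal weight, which works out to 48 / 12 = 4 points per requirement; the helper names `total_score` and `compliance_pct` are hypothetical and not taken from the study.

```python
# Illustrative sketch of the scoring scale. ASSUMPTION: 12 equally weighted
# requirements, each scored from 0 to 4 points, for a maximum of 48.

NUM_REQUIREMENTS = 12
MAX_POINTS_PER_REQUIREMENT = 4
MAX_TOTAL = NUM_REQUIREMENTS * MAX_POINTS_PER_REQUIREMENT  # 48


def total_score(per_requirement: list[int]) -> int:
    """Sum per-requirement scores after checking count and range."""
    if len(per_requirement) != NUM_REQUIREMENTS:
        raise ValueError(f"expected {NUM_REQUIREMENTS} scores")
    if any(not 0 <= s <= MAX_POINTS_PER_REQUIREMENT for s in per_requirement):
        raise ValueError(f"each score must be between 0 and {MAX_POINTS_PER_REQUIREMENT}")
    return sum(per_requirement)


def compliance_pct(total_points: int) -> float:
    """Convert a total score into a percentage of the 48-point maximum."""
    if not 0 <= total_points <= MAX_TOTAL:
        raise ValueError(f"total must be between 0 and {MAX_TOTAL}")
    return 100 * total_points / MAX_TOTAL


if __name__ == "__main__":
    # GPT-4's reported total from the article.
    print(f"GPT-4: 25/{MAX_TOTAL} = {compliance_pct(25):.1f}%")  # prints: GPT-4: 25/48 = 52.1%
```

On this scale, “less than 50%” means fewer than 24 points, so GPT-4’s 25 points sit just above the halfway mark.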

Open-source vs Closed-source Models: A Comparative Analysis

The study found that open-source models generally outperformed closed-source models in several critical areas, including transparency about data sources and disclosure of resource usage. Closed-source models, by contrast, did better on comprehensive documentation and risk mitigation. Beyond these differences, the study highlighted significant areas of uncertainty, such as which “dimensions of performance” providers must evaluate to comply with the AI Act’s numerous requirements.

The study’s authors call on the AI industry to take steps to ensure that its models meet the EU’s requirements, and on the EU to provide further guidance on how the regulations will be enforced.

The Way Forward: Embracing the EU’s AI Act

Despite the challenges and uncertainties, the researchers support implementing the EU’s AI Act. They argue that it could spur AI developers to collectively establish industry standards that improve transparency and bring significant positive change to the foundation model ecosystem.